#GPU Computing Assignment Help

KRTRIM AND ASALEE

KRTRIM ("krih-trim") comes from the Hindi word कृत्रिम, which translates to "artificial." It is the informal term for computational phantoms, and though it originated as an adjective in its native language, it is now commonly used in English as both an adjective and a noun.

Krtrim are described as artificial intelligences, though this definition differs from the modern computer science term. Krtrim operate a GPU unit that controls their body (their "vessel") and are electric entities of Base 2. Their Base number comes from the fact that they exist in binary, literally base two. OURO does not disclose exactly how krtrim are created, but it is commonly accepted that they are built upon fragmented human Essences.

Krtrim can pilot several different vessels depending on their age or occupation. These vessels include:

Class-1A civilian models stand at roughly four and a half feet tall (137cm), though their exact height depends on the age of the krtrim piloting it. These vessels are given to juvenile krtrim until they reach 20KR.

Class-1B civilian models stand at five feet tall (152cm) and are given to adult krtrim who are not eligible for occupational advancements. This is the most common civilian vessel.

Class-1C is a discontinued civilian model used exclusively for jinkōs. These vessels stood at roughly five-foot-three (160cm) and were slimmer than traditional civilian models.

If a krtrim is considered eligible for occupational advancements ("oc-ads"), they can be assigned a vessel with greater physical prowess or other enhancements; e.g., a construction worker could receive strength oc-ads to make them better equipped for their job. These vessels fall under the umbrella of Class-2.

Class-3 models are reserved for combat-oriented occupations. Most notable are Tangent-era soldiers such as Bheema, who stand as tall as six-foot-five (198cm) and have considerable oc-ads.

All krtrim models use un-dyed skin overlays; because of the color of their coolant, this gives them their distinctive blue complexion. In addition, krtrim lack the full-body skin overlay that asalee models have, so they wear a body suit to keep their joints from pinching or catching on their clothes. This suit also helps them maintain a proper operating temperature and keeps their elastics from fatiguing. Finally, since krtrim models differ from one individual to the next only in faceplate design, each krtrim is assigned a permanent color ID.

ASALEE ("us-lee") comes from the Hindi word असली, which translates to "real." It is the informal term for humans, and though it originated as an adjective in its native language, it is now commonly used in English as both an adjective and a noun.

Asalee are Base 4 as a result of the four nucleotide bases in human DNA. The vessels asalee operate can be either human (the body they were born with) or posthuman, if they have undergone consciousness transfer.

Asalee posthumans pilot Class-1D OURO models, which are highly customizable to match either the individual's previous human appearance or however they want to present. Options include dyed skin overlays, height adjustment, cosmetic alterations, and more. These models can look convincingly human, aside from their reflective pupils.

How to Onboard an Artificial Intelligence Developer

Hiring an artificial intelligence developer is a significant milestone, but its success depends heavily on the onboarding process. Unlike conventional software developers, who can usually step into established codebases and frameworks, AI developers need access to specialized resources, advanced tools, and broad context about business objectives before they can contribute effectively.

Preparing the Technical Foundation

An AI developer needs timely access to good-quality data, and data access is often the biggest obstacle in AI projects. Smart companies start planning data access, cleaning, and documentation well before the new employee's start date. This includes identifying suitable datasets, defining data governance procedures, and setting clear, actionable privacy and security requirements.

Computing resources are another important part of preparation. An AI developer needs more capable hardware than a typical software engineer, such as GPUs to train and run models and enough storage to handle large datasets. Cloud compute must be provisioned and configured with the right permissions, and development environments should be validated to confirm they can support the computational demands of machine learning workflows.

Access to specialized software and platforms must be prearranged. The artificial intelligence developer will likely need licenses for data science platforms, machine learning environments, and visualization tools that do not necessarily fit typical software development toolkits. These tools have special configurations and integrations that take time to set up correctly.

Setting Up Clear Project Context

An AI developer benefits enormously from understanding the broader business context in which they operate. This goes beyond a typical job description to include explicit explanations of how AI work fits into company strategy, which success measures matter most, and how their work will affect different groups throughout the company.

Successful onboarding also includes thorough briefings on existing systems, data sources, and previous AI initiatives in the company. The AI developer needs to understand what was tried before, what worked, what failed, and why. Knowing this history prevents duplicated effort and lets them build on past lessons instead of starting from scratch.

Stakeholder mapping is also central to the AI developer's success. They need to understand who makes decisions on AI projects, who provides domain expertise, who controls data access, and who will eventually deploy their solutions. Introducing them to the most critical stakeholders as early as possible helps build relationships that become invaluable as projects progress.

Establishing Collaborative Learning Opportunities

In most cases, the AI developer has technical skills that no one on the current team possesses, but needs to learn domain knowledge that only internal stakeholders have. Structured knowledge-transfer sessions help close these gaps, allowing the new hire to share AI expertise while learning about business processes, customer requirements, and operational constraints.

Pairing programs are ideal for AI developers, although the pairing partner need not be another developer. Domain specialists, data analysts, or business stakeholders can serve as learning partners who help the AI developer understand context while learning about AI capabilities themselves. Such relationships often evolve into long-term collaborations that improve project success.

Cross-functional project assignments during onboarding show the AI developer how different departments operate and what problems they face. Exposure to this environment sparks ideas for AI solutions and teaches the developer to prioritize tasks by their value to the organization rather than by technical interest alone.

Setting Realistic Expectations and Timelines

An AI developer faces challenges that developers of conventional software rarely encounter. Machine learning projects are uncertain and experimental, and they may fail despite the best efforts. Good onboarding includes an honest discussion of these realities and sets expectations that account for the iterative nature of AI development.

Timeline expectations should be calibrated during onboarding. Everyone involved should keep in mind that initial projects may take longer than planned because of data quality issues, unexpected technical problems, or the need for multiple experimental cycles. Setting realistic milestones avoids frustration and maintains stakeholder confidence.

Success metrics for the AI developer must be defined upfront and communicated clearly. They should balance technical achievement with business value, keeping in mind that significant AI work will not always produce quantifiable business outcomes immediately. Early wins should be identified and pursued to build momentum and visibility.

Building Long-term Growth Paths

Onboarding should include discussions about career development and opportunities to build new skills. AI technology keeps evolving, so developers must keep learning through conferences, courses, and experimenting with new techniques and tools. Organizations that support this continuous learning retain employees and stay technically current.

Mentorship programs can be immensely valuable to AI developers, even if mentors have to be found outside the organization when none are available internally. Connecting new hires with external AI communities, professional networks, and industry experts keeps them connected to broader developments in the field.

The AI developer should understand how their role might evolve as the company's AI capabilities mature. This may include opportunities to lead teams, influence strategy, or specialize in a particular area. Visible paths to advancement retain talent and encourage long-term commitment to organizational objectives.

Measuring Onboarding Success

Effective onboarding of an AI engineer should lead to meaningful contributions within the first few months, but those contributions look different from productivity in a traditional role. Early indicators include solid exploratory data analysis, the identification of critical use cases, and established working relationships with key stakeholders, rather than lines of code written or features released.

“How RHosting Helps Developers Run Heavy Software on Lightweight Devices”

In an era of digital nomadism and lean tech stacks, many developers prefer lightweight laptops and minimal setups. But development often demands heavy-duty software—IDEs like Visual Studio, data analysis tools, virtual machines, container platforms, and more. The dilemma? Lightweight devices often can’t handle the workload.

That’s where RHosting’s smart RDP solution becomes a game-changer.

🧠 The Challenge: Power-Hungry Tools on Underpowered Hardware

Developers often face:

Limited CPU/RAM on personal machines

High latency or lag with traditional remote tools

Inability to run resource-intensive IDEs, emulators, or databases locally

Security risks when transferring sensitive code or client data

This makes coding on the go—or even from home—frustrating and inefficient.

🚀 The RHosting Solution: Power in the Cloud, Access Anywhere

RHosting turns any lightweight laptop or tablet into a development powerhouse by offloading compute-intensive tasks to high-performance cloud Windows servers.

Here’s how it works:

⚙️ 1. High-Performance Cloud Servers

Spin up a cloud-based Windows environment with:

Multi-core CPUs

Generous RAM and SSD storage

Preinstalled dev tools or full customization

GPU-accelerated options (on request)

This means you can run VS Code, Android Studio, Docker, Jupyter Notebooks, or even data-heavy scripts without taxing your personal device.

🔐 2. Secure, Low-Latency Remote Access

Unlike traditional RDP, RHosting uses proprietary protocols optimized for real-time responsiveness and security:

Encrypted sessions with MFA

Fast frame rendering, even on low bandwidth

Clipboard, file transfer, and peripheral support

Developers can code, debug, and deploy as if they were sitting in front of a powerful desktop—from a Chromebook or tablet.

🛠 3. Application-Level Access (Only What You Need)

RHosting lets users access only specific apps or environments, reducing distractions and security risks.

Example: A front-end dev can access Figma, a browser, and VS Code. A data engineer gets Jupyter, Python, and data folders—nothing more.

📂 4. Folder-Level Permissions for Teams

Collaborating on a project? You can assign custom access to codebases, test environments, and shared folders—great for dev teams, agencies, or open-source contributors.

💡 Ideal Use Cases

Freelance developers working across devices

Students and coding bootcampers without high-end laptops

Remote teams collaborating on shared codebases

Enterprise developers with strict security policies

🧩 Real World: A Developer’s Day with RHosting

Log in from a thin-and-light laptop

Launch a remote Windows server with all dev tools preloaded

Run a heavy build or simulate a test environment

Save work to cloud storage, shut down server to save costs

Resume from any device—no sync issues, no lag

🎯 Conclusion: Light Device, Heavy Performance

With RHosting, your hardware no longer limits your coding potential. Whether you're building the next big app or debugging legacy systems, you can access enterprise-grade compute power securely from anywhere.

So if you’re tired of hearing your laptop fans scream every time you hit “build,” it’s time to switch to RHosting—because the smartest developers know where to offload their load.

Practice 1: Developing Skills

Developing 1 (Week 6)

Since I don't want all the lights to emit at the same brightness, I adjust each one as needed. I also enable the 'Cast Shadows' option so that the lights cast shadows on the rounded cylinder.

Figure 1 (Tweaking light properties)

I also set the denoise operation to run on my GPU. Forgetting this step is a rookie mistake that even professionals sometimes make; it is so simple and obvious that people overlook it.

This setting makes your computer's GPU, rather than the CPU, calculate the denoise operation. A dedicated GPU is usually far faster at this kind of work than the CPU or its integrated graphics, so you are better off running the rendering and other heavy calculations for your 3D work on the GPU: the viewport updates faster in real time, and you save a lot of time.

Figure 2 (Setting the operations to GPU)

I change the normal tab to a height tab in the material section of the rounded cylinder. This allows me to add the custom sculpted height map from ZBrush to the cylinder.

Figure 3 (Setting the normals to height)

I then assign all of the textures we exported from Substance Designer to their corresponding slots in the material tab. This includes the roughness, metallic, and ambient occlusion maps and, most importantly, the base color (albedo).

Figure 4 (All of the available textures for the material)

Figure 5 (Textured Stone Slab)

At this point, most of the assignment is complete. The only remaining step is that, since we created a height map, we can increase the displacement value to push out the tileable texture, which helps it stand out more.

Shop Smooth Multitasking PCs in the UK with Savitar Technology Ltd

In today’s fast-paced world, multitasking is more than just a skill; it’s a necessity. Whether you’re a student juggling assignments and research, a professional navigating between video conferences and spreadsheets, or a gamer streaming your gameplay while chatting with friends, a reliable, high-performing PC is essential. That’s where Savitar Technology Ltd steps in, offering cutting-edge systems designed to redefine your computing experience. If you’re looking to shop for smooth multitasking PCs in the UK, you’ve come to the right place.

Why Multitasking Demands the Best PCs

The demands of multitasking require a PC with the right combination of hardware and software. A smooth multitasking PC ensures you can switch seamlessly between applications without lag, crashes, or slowdowns. Here’s what makes these systems stand out:

Powerful Processors: Multitasking thrives on speed, and the latest multi-core processors ensure fast, efficient performance, enabling you to run several demanding applications simultaneously.

Ample RAM: With 16GB or more RAM, you can ensure smooth operation, even with multiple tabs, apps, and software open.

Fast Storage Solutions: Solid-State Drives (SSDs) ensure faster boot times and quicker access to files, giving you an uninterrupted flow.

Graphics Performance: If your tasks involve graphic design, video editing, or gaming, dedicated GPUs handle intensive visual workloads efficiently.

At Savitar Technology Ltd, we combine these essential features to bring you PCs that enhance productivity and provide a seamless user experience.

The Savitar Technology Ltd Promise

Savitar Technology Ltd is a name synonymous with quality, innovation, and customer satisfaction. Our goal is to cater to diverse computing needs across the UK by offering premium PCs that don’t just meet expectations—they exceed them. Here’s why we’re a trusted choice for multitasking PCs:

Custom-Built for Your Needs

No two users are the same, and neither should their PCs be. At Savitar Technology Ltd, we offer custom-built systems tailored to your specific requirements. Whether you’re an architect needing CAD software, a gamer seeking ultra-high performance, or an entrepreneur managing multiple business applications, we’ve got you covered.

Quality Assurance

We use only premium components to build systems that last. Each PC undergoes rigorous testing to ensure it can handle even the most demanding multitasking scenarios with ease. From hardware durability to software optimization, quality is at the core of everything we do.

Expert Support

Our commitment doesn’t end with your purchase. We offer comprehensive customer support to help you make the most of your new PC. Whether you need advice on system upgrades or troubleshooting assistance, our experts are just a call or email away.

Competitive Pricing

While we’re committed to quality, we also understand the importance of affordability. Savitar Technology Ltd offers top-tier multitasking PCs at competitive prices, ensuring you get exceptional value for your money.

For more details, you can visit us:

Order Luna Fast Gaming PC Bundle Online

Shop Luna Gaming PC Bundle Online

Best Luna Fast Gaming PC Bundle

Intel oneDPL (oneAPI DPC++ Library) Offloads C++ to SYCL

Offload Standard Parallel C++ Code to a SYCL Device Using the Intel oneAPI DPC++ Library (oneDPL).

Extend C++ Parallel STL algorithms with multiplatform parallel computing capabilities. With the Parallel Standard Template Library, also known as Parallel STL or pSTL, C++ algorithms can be executed in parallel and vectorized.

Utilizing the cross-platform parallelism capabilities of SYCL and the computational power of heterogeneous architectures, you may improve application performance by offloading Parallel STL algorithms to several devices (CPUs or GPUs) that support the SYCL programming framework. Multiarchitecture, accelerated parallel programming across heterogeneous hardware is made possible by the Intel oneAPI DPC++ Library (oneDPL), which allows you to offload Parallel STL code to SYCL devices.

The code example in this article shows how to offload C++ Parallel STL code to a SYCL device using the oneDPL pSTL_offload preview feature.

Parallel API

As outlined in ISO/IEC 14882:2017 (often referred to as C++17) and C++20, the Parallel API in the Intel oneAPI DPC++ Library (oneDPL) implements the C++ standard algorithms with execution policies. It provides data-parallel execution on SYCL-supported accelerators with the Intel oneAPI DPC++/C++ Compiler, as well as threaded and SIMD execution of these algorithms on Intel processors, built on top of OpenMP and oneTBB.

The Parallel API also offers parallel range algorithms that take an execution policy, extending the capabilities of the C++20 range algorithms.

In addition, oneDPL offers specialized versions of several algorithms, such as:

Segmented reduction

Segmented scan

Algorithms for vectorized searches

Key-value pair sorting

Conditional transformation

The utility API includes iterators and function object classes. The iterators include counting and discard iterators, as well as iterators that permute, zip, and transform other iterators. The function object classes provide identity, minimum, and maximum operations that can be passed to reduction or transform algorithms.

An experimental implementation of asynchronous algorithms is also included in oneDPL.

Intel oneAPI DPC++ Library (oneDPL): An Overview

When used with the Intel oneAPI DPC++/C++ Compiler, oneDPL speeds up SYCL kernels for accelerated parallel programming on a variety of hardware accelerators and architectures. With the help of its Parallel API, which offers range-based algorithms, execution rules, and parallel extensions of C++ STL algorithms, C++ STL-styled programs may be efficiently executed in parallel on multi-core CPUs and offloaded to GPUs.

It supports parallel computing libraries that developers are already familiar with, such as Parallel STL and Boost.Compute. Its SYCL-specific API helps accelerate SYCL kernels on GPUs. In addition, you can use oneDPL's Device Selection API to dynamically assign available compute resources to your workload according to predefined device execution policies.

For simple, automatic CUDA-to-SYCL code migration for multiarchitecture programming free from vendor lock-in, the library integrates with the Intel DPC++ Compatibility Tool and its open-source counterpart, SYCLomatic.

About the Code Sample

With just a few code modifications, the pSTL offload code example demonstrates how to offload common C++ parallel algorithms to SYCL devices (CPUs and GPUs). It relies on an experimental oneDPL capability enabled by the -fsycl-pstl-offload option of the Intel oneAPI DPC++/C++ Compiler.

To perform data parallel computations on heterogeneous devices, the oneDPL Parallel API offers the following execution policies:

unseq: unsequenced (vectorized) execution

par: parallel execution

par_unseq: combines the effects of the par and unseq policies

The following three programs/sub-samples make up the code sample:

FileWordCount counts the words in a file using C++17 parallel algorithms,

WordCount counts words using C++17 parallel algorithms, and

Various STL algorithms with the aforementioned execution policies (unseq, par, and par_unseq) are implemented by ParSTLTests.

The code example shows how to use the -fsycl-pstl-offload compiler option and standard header inclusion in existing code to automatically offload STL algorithms called with the std::execution::par_unseq policy to a selected SYCL device.

The oneAPI programming model offers device selection environment variables that let you offload your SYCL or OpenMP code to a specific compute resource or accelerator (such as a CPU, GPU, or FPGA). One such variable is ONEAPI_DEVICE_SELECTOR, which restricts which of the available compute resources can be used to run SYCL- and OpenMP-based applications. The variable also allows sub-devices to be selected as separate execution devices.

The code example demonstrates how to use the ONEAPI_DEVICE_SELECTOR variable to offload the code to a selected target device, with oneDPL implementing the offloaded code. If the -fsycl-pstl-offload option is used without naming a specific device, the code is offloaded to the default SYCL device.

The example shows how to offload STL code to an Intel Xeon CPU and an Intel Data Center GPU Max. However, offloading C++ STL code to any SYCL device may be done in the same way.

What Comes Next?

To speed up SYCL kernels on the newest CPUs, GPUs, and other accelerators, get started with oneDPL and examine oneDPL code examples right now!

For accelerated, multiarchitecture, high-performance parallel computing, we also encourage you to investigate other AI and HPC technologies based on the unified oneAPI programming model.

Read more on govindhtech.com

Advanced Image Processing Assignment: Solving Object Recognition Challenges

Image processing is a crucial aspect of modern technology, driving advancements in fields like computer vision, medical imaging, and digital photography. University-level image processing assignments can be particularly challenging, requiring a blend of theoretical understanding and practical application. In this blog, we will explore a tough image processing assignment question related to object recognition, explain the underlying concepts in detail, and provide a step-by-step guide to solving it.

Sample Assignment Question: Object Recognition in Natural Scenes

Question:

You are given a dataset of images containing various natural scenes. Your task is to develop an image processing algorithm that can recognize and classify different objects (such as trees, buildings, cars, and people) within these images. Outline the steps you would take to preprocess the images, segment the objects, extract relevant features, and classify the objects. Discuss the challenges you might face and how you would address them.

Step-by-Step Guide to Answering the Question

Step 1: Image Acquisition

The first step is to acquire a dataset of images containing natural scenes. These images can be obtained from online repositories, or you can create your own dataset using a digital camera. Ensure that the images are in a format compatible with your image processing tools (e.g., JPEG, PNG).

Step 2: Preprocessing

Preprocessing is essential for enhancing image quality and making the subsequent steps more effective. Common preprocessing techniques include:

Noise Reduction: Use filters like Gaussian or median filters to reduce noise.

Contrast Adjustment: Enhance the contrast to make objects more distinguishable.

Grayscale Conversion: Convert the image to grayscale if color information is not critical for recognition.

Step 3: Segmentation

Segmentation involves dividing the image into meaningful segments to isolate objects. Techniques for segmentation include:

Thresholding: Convert the image to a binary format by setting a threshold value.

Edge Detection: Use edge detection algorithms like Canny or Sobel to identify object boundaries.

Region-Based Segmentation: Group pixels into regions based on their similarity.

Step 4: Feature Extraction

Feature extraction is crucial for identifying characteristics that can help in object classification. Common features include:

Edges: Identify the boundaries of objects.

Textures: Analyze the surface properties of objects.

Shapes: Recognize geometric shapes using techniques like Hough Transform.

Step 5: Classification

Classification involves using machine learning algorithms to categorize the objects based on the extracted features. Popular algorithms include:

Support Vector Machines (SVM): Effective for binary classification problems.

Convolutional Neural Networks (CNN): Highly effective for image classification tasks.

k-Nearest Neighbors (k-NN): Simple and effective for small datasets.

Challenges and Solutions

Challenge 1: Variability in Object Appearance. Objects in natural scenes can vary widely in appearance due to changes in lighting, angle, and occlusion.

Solution: Use robust feature extraction methods and augment the training dataset with variations in object appearance.

Challenge 2: Background Clutter. Natural scenes often have complex backgrounds that can interfere with object recognition.

Solution: Use advanced segmentation techniques to accurately isolate objects from the background.

Challenge 3: Real-Time Processing. Processing images in real time can be computationally intensive.

Solution: Optimize algorithms for speed and use efficient hardware like GPUs.

How We Help Students

At matlabassignmentexperts.com, we understand the complexities involved in university-level image processing assignments. Our team of experts provides comprehensive image processing assignment help, including:

Personalized Tutoring: One-on-one sessions to help you understand tough concepts.

Step-by-Step Solutions: Detailed guides and explanations for assignment questions.

Quality Assurance: Thorough review and feedback to ensure your work meets academic standards.

24/7 Support: Around-the-clock assistance to address any queries or concerns.

We are dedicated to helping you succeed in your studies and achieve your academic goals.

Conclusion

Object recognition is a challenging yet fascinating aspect of image processing. By understanding the key concepts and following a structured approach, you can tackle even the toughest assignments. Remember, the key steps involve image acquisition, preprocessing, segmentation, feature extraction, and classification. With the right techniques and support, you can excel in your image processing coursework and beyond.

For more personalized assistance and expert guidance, visit matlabassignmentexperts.com and let us help you achieve academic success in image processing and beyond.

Computer Graphics Assignment Help in Australia

In the technological field of visual computing known as computer graphics, professionals use computer programs both to create artificial images and to integrate spatial and visual data gathered from the real world. Every image that anyone sees on a computer display is, in fact, a graphic, because in the background the CPU and the driver set the parameters of every visible image. Various mathematical operations are also used to change its properties, such as shape, size, and motion. This subfield of computer science seems intriguing, but its complex languages make it difficult to understand. Computer graphics assignments aren't easy to complete, as they have multidimensional aspects that require in-depth knowledge of the subject and its various concepts, such as screen mapping, GPUs, CAD systems, picking methods, procedural modeling, etc.

Types of computer graphics

Interactive computer graphics

This type of computer graphics involves two-way communication between the computer and the user. The user is given some (not complete) control over an image through an input device. After receiving the signals from the input device, the computer modifies the picture accordingly. Interactive computer graphics play a major part in our lives in a number of ways. For example, they help an aeroplane pilot navigate, and flight simulators let pilots train on the ground.

Non-interactive computer graphics

Non-interactive (passive) computer graphics describe situations in which the user has no influence over the image, for example, screen savers. For further information on the types of computer graphics, check out the best computer graphics assignment help from our graphics experts.

Why choose our computer graphics assignment help?

At assignmenter.net, students get support from the best experts for their computer graphics assignments. A computer graphics assignment is always a very challenging one. A writer with relevant professional qualifications and years of experience working as a computer graphics consultant, CAD expert, 3D animation expert, etc. is assigned to help each student seeking help with a computer graphics assignment. Students also take computer graphics courses online, so the experts need to be capable of completing these assignments in every respect, so that students can present their respective assignments confidently.

Our Features

Assignmenter.net provides complete support to each student who seeks help from our expert writers. An assignment writing job doesn't end with solving the problem and writing the program the assignment requires. Many other factors, such as revisions to certain sections, writing other assignments, and giving the work a professional look, are equally important for every student. Our experts believe in delivering the best computer graphics assignment solution to students as soon as possible. Therefore, our team of computer graphics experts at assignmenter.net remains active 24x7 to give students the necessary support as quickly as possible.

https://assignmenter.net/computer-graphics-assignment-help-in-australia/


Customization at Your Fingertips: Tailoring Your Computing Experience with RDPextra's Fast GPU RDP Solutions

Enhancing Your Computing Power with RDPextra’s GPU Solutions

The Advantages of GPU-Powered RDP

Graphics Processing Units (GPUs) are renowned for their ability to handle intensive graphical tasks efficiently. With GPU-powered RDP solutions, users can get better performance and smoother operation, particularly when working with graphics-intensive programs or tasks. This is especially beneficial for customers who need botting RDP capabilities, where seamless performance is crucial for automated tasks. At RDPextra, we prioritize performance, ensuring that our GPU RDP solutions meet the needs of many different use cases, whether you're looking to buy RDP for gaming, design work, or botting.

Tailored Solutions for Botting RDP Needs

Botting, the process of automating tasks using scripts or software, often requires reliable, high-performance computing resources. Whether you are automating repetitive online tasks or running data-scraping operations, access to a reliable botting RDP solution is critical. At RDPextra, we recognize the unique requirements of botting enthusiasts and professionals alike. Our GPU-powered RDP solutions are optimized to deliver the speed and stability needed to run bots efficiently. With our botting RDP services, you can streamline your workflow and maximize productivity without compromising on performance.

Seamless Access Anywhere, Anytime

Streamlined Setup and Configuration

Setting up and configuring RDP solutions can be a daunting task for inexperienced users. However, with RDPextra's user-friendly interface and straightforward setup process, getting started is a breeze. Whether you're a seasoned professional or a beginner, our intuitive platform guides you through the setup process, so you're up and running in no time. From choosing the right GPU configuration to customizing your instance to your specific requirements, RDPextra simplifies the whole process of buying RDP and getting started with botting RDP solutions.

Secure and Reliable Infrastructure

Security is paramount for remote computing solutions, especially for botting RDP, where sensitive tasks and data may be involved. At RDPextra, we prioritize the security and integrity of our infrastructure, implementing robust measures to protect your data and ensure a secure computing environment. With end-to-end encryption, multi-factor authentication, and proactive monitoring, you can trust RDPextra to keep your data safe from unauthorized access and malicious threats. Our reliable infrastructure ensures uptime and availability, allowing you to focus on your tasks without worrying about downtime or interruptions.

Dedicated Support and Assistance

At RDPextra, we believe in providing exceptional customer service and support to our users. Whether you're encountering technical issues, have questions about our services, or want guidance on optimizing your botting RDP setup, our team of experts is here to help. With prompt responses and personalized assistance, we strive to make your experience with RDPextra seamless and hassle-free. We're committed to helping you make the most of our GPU-powered RDP solutions, whether you're a seasoned professional or a first-time user looking to buy RDP for botting.

Conclusion

In a world where computing power is paramount, RDPextra's GPU-powered RDP solutions offer exceptional performance and flexibility. Whether you're looking to buy RDP for gaming, design work, or botting, our fast GPU RDP solutions cater to diverse needs with seamless performance and reliability. With a streamlined setup, secure infrastructure, and dedicated support, RDPextra lets users tailor their computing experience to their particular requirements. Experience the convenience and efficiency of remote computing with RDPextra, your gateway to customized computing power at your fingertips.


NinjaTech AI & AWS: Next-Gen AI Agents with Amazon Chips

AWS and NinjaTech AI Collaborate to Release the Next Generation of Trained AI Agents Utilizing AI Chips from Amazon.

The goal of Silicon Valley-based NinjaTech AI, a generative AI startup, is to make everyone more productive by taking care of tedious tasks. Today, the firm announced the release of Ninja, a new personal AI that moves beyond co-pilots and AI assistants to autonomous agents.

AWS Trainium

NinjaTech AI is building, training, and scaling custom AI agents that can autonomously handle complex tasks, such as research and meeting scheduling, with the help of Amazon Web Services’ (AWS) purpose-built machine learning (ML) chips, Trainium and Inferentia2, as well as Amazon SageMaker, a cloud-based machine learning service.

These AI agents integrate the potential of generative AI into routine activities, saving time and money for all users. Ninja can handle several jobs at once using AWS’s cloud capabilities, allowing users to assign new tasks without having to wait for the completion of ongoing ones.
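How Ninja schedules work internally is not described here. As a generic illustration of the idea that a new task does not have to wait for earlier ones to finish, here is a minimal Python `asyncio` sketch; the task names and delays are hypothetical stand-ins for real agent work such as model or API calls.

```python
import asyncio

# Hypothetical stand-in for one agent task; a real agent would call models or APIs here.
async def run_task(name, seconds):
    await asyncio.sleep(seconds)  # simulates waiting on I/O, e.g. a model response
    return f"{name} done"

async def main():
    # All tasks are scheduled up front, so none of them waits for the others to finish.
    tasks = [
        asyncio.create_task(run_task("research", 0.2)),
        asyncio.create_task(run_task("schedule meeting", 0.1)),
        asyncio.create_task(run_task("draft email", 0.05)),
    ]
    # gather returns results in the order the tasks were passed in.
    return await asyncio.gather(*tasks)

results = asyncio.run(main())
print(results)
```

Because the three tasks overlap, the total wall-clock time is roughly that of the longest task rather than the sum of all three.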

Inferentia2

“NinjaTech AI has truly changed the game by collaborating with AWS’s Annapurna Labs,” said Babak Pahlavan, NinjaTech AI’s founder and CEO. “The flexibility and power of Trainium and Inferentia2 chips for our reinforcement-learning AI agents far exceeded expectations: they integrate easily and can elastically scale to thousands of nodes via Amazon SageMaker.

“With up to 80% cost savings and 60% better energy efficiency than comparable GPUs, these next-generation AWS-designed chips natively support the larger 70B variants of the latest popular open-source models, such as Llama 3. Beyond the technology itself, the collaborative technical support from the AWS team has contributed greatly to our development of deep technologies.”

AI agents are built on large language models (LLMs) that are highly customized and fine-tuned using a range of techniques, including reinforcement learning, which gives them their accuracy and speed. Given the scarcity and high cost of compute on today’s GPUs, as well as the inelasticity of these chips, building AI agents successfully requires elastic, inexpensive chips designed specifically for reinforcement learning.

AWS has overcome this obstacle for the AI agent ecosystem with its proprietary chip technology, which allows quick training bursts that scale to thousands of nodes as needed in every training cycle. Combined with Amazon SageMaker, which offers the option of using open-source models, AI agent training is now fast, flexible, and affordable.

AWS Trainium chip

Artificial intelligence (AI) agents are quickly becoming the next wave of productivity technology, one that will change how people collaborate, learn, and work. According to Gadi Hutt, senior director of AWS’s Annapurna Labs, “NinjaTech AI has made it possible for customers to swiftly scale fast, accurate, and affordable agents using AWS Inferentia2 and Trainium AI chips. We’re excited to help the NinjaTech AI team bring autonomous agents to the market, while also advancing our commitment to empower open-source ML and popular frameworks like PyTorch and JAX.”

EC2 Trainium

NinjaTech AI trained its models on Amazon EC2 Trn1 instances, powered by Trainium, and is serving them on Amazon EC2 Inf2 instances, powered by Inferentia2. Trainium drives high-performance compute clusters on AWS for training LLMs faster, more affordably, and with less energy. With up to 40% better price performance, Inferentia2 lets models run inference significantly faster and more cheaply.

AWS Trainium and AWS Inferentia2

NinjaTech AI has worked closely with AWS to accelerate the creation of innovative generative AI-based planners and action engines, which are essential for building cutting-edge AI agents. “Our decision to train and deploy Ninja on Trainium and Inferentia2 chips made perfect sense because we needed the most elastic and highest-performing chips with incredible accuracy and speed,” Pahlavan continued. “Every generative AI company that wants access to on-demand AI chips with amazing flexibility and speed should be thinking about AWS.”

Users can access Ninja by visiting myninja.ai. Ninja currently offers four conversational AI agents, which can assist with coding tasks, schedule meetings via email, conduct multi-step, real-time online research, write emails, and offer advice. Ninja also makes it simple to compare outputs side by side from leading models by companies such as Google, Anthropic, and OpenAI. Finally, Ninja gives users an almost limitless amount of asynchronous infrastructure, so they can work on multiple projects at once. As Ninja improves with each use, customers will become more effective in their daily lives.

Read more on Govindhtech.com

#NinjaTechAI#aws#aichips#ai#Amazon#AWSTrainium#EC2Trainium#llm#AmazonEC2#OpenAI#technology#technews#news#govindhtech


How To Choose the Right PC Components for Your Gaming Computer

Are you someone who loves gaming and dreams of owning a custom-built gaming computer? Building your gaming rig from the ground up can be an incredibly satisfying experience, because it lets you tailor every aspect to your gaming preferences. With the wide range of PC components on the market, that freedom can be both exciting and confusing.

In this blog, we will guide you through selecting the perfect PC components for your gaming setup. Whether you're an experienced builder looking to upgrade or a beginner starting from scratch, our step-by-step advice will help you make well-informed decisions and buy the right components for your gaming computer.

From the heart of your gaming PC, the central processing unit (CPU), to the graphics card (GPU) that renders your games, the motherboard, and everything in between, we'll explain each component and how they work together to create a well-balanced gaming PC.

What Components Do You Need for a Gaming PC?

Gaming computers offer limitless possibilities when it comes to price and performance. But with so many options available, which components are truly essential for a great gaming experience? In the world of gaming, performance is key, and the right combination of components can make all the difference in delivering smooth and immersive gameplay.

In this guide, we will break down the must-have gaming PC components, starting from the essential necessities to exploring high-end options that can give you the edge in today's most demanding games. So, let's dive in and uncover the secrets to building your dream gaming PC! Here are the key components you should consider:

Central Processing Unit (CPU)

The CPU is one of the most important components of a gaming PC. It acts as the "brain" of the computer, handling the calculations and tasks needed to run games and other applications. Look for a powerful multicore processor from reputable brands like Intel or AMD.

Here are some considerations to keep in mind when buying the right CPU for your gaming PC:

Performance: Look for CPUs with high clock speeds and multiple cores. For gaming, a quad-core or hexa-core CPU is generally sufficient, but higher core counts can be beneficial for multitasking and future-proofing.

Compatibility: Ensure the CPU is compatible with the motherboard you intend to use. Check the socket type and the motherboard's chipset to ensure they match.

Brand and Generation: Popular CPU brands for gaming are Intel and AMD. Consider the latest generation CPUs as they often offer improved performance and efficiency.

Cooling Requirements: High-performance CPUs can generate a lot of heat, so consider investing in a good-quality cooling solution to keep temperatures in check.

Budget: CPUs come in various price ranges. Set a budget and find a CPU that offers the best value for your gaming needs.

User Reviews and Benchmarks: Before purchasing, read user reviews and look at gaming benchmarks to see how the CPU performs in real-world gaming scenarios.

Future Upgradability: Consider the upgrade options available for the CPU's platform. CPUs with longer-lasting socket compatibility offer more opportunities for future upgrades without changing the motherboard.
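The compatibility checks above (matching socket and supported chipset) can be expressed as a small data check. This is a sketch with hypothetical part data: the CPU name and its supported-chipset list are made up for illustration, so always confirm against the motherboard vendor's CPU support list.

```python
from dataclasses import dataclass, field

@dataclass
class CPU:
    name: str
    socket: str
    supported_chipsets: set = field(default_factory=set)  # hypothetical support list

@dataclass
class Motherboard:
    name: str
    socket: str
    chipset: str

def check_compatibility(cpu, board):
    """Return a list of problems; an empty list means no mismatch was found."""
    problems = []
    if cpu.socket != board.socket:
        problems.append(f"socket mismatch: CPU uses {cpu.socket}, board has {board.socket}")
    if board.chipset not in cpu.supported_chipsets:
        problems.append(f"chipset {board.chipset} is not listed for {cpu.name}")
    return problems

cpu = CPU("example-cpu", "AM4", {"B550", "X570"})
print(check_compatibility(cpu, Motherboard("board-1", "AM4", "B550")))      # []
print(check_compatibility(cpu, Motherboard("board-2", "LGA1151", "Z390")))  # two problems
```

Returning a list of problems rather than a single True/False makes it clear which part of the pairing is wrong.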

Graphics card (GPU):

The GPU, also known as the graphics card, is crucial for rendering visuals in games. It determines the visual quality and frame rates you'll get. Invest in a dedicated gaming GPU with ample VRAM for optimal performance. To make an informed and satisfying decision, keep the following considerations in mind:

Assign Your Budget Wisely: Define a clear budget for your graphics card purchase.

Understand Your Gaming Requirements: Identifying your gaming needs will help you find a GPU that matches your expectations.

Verify Compatibility: Ensure that the GPU is compatible with your existing system. Verify the PCIe slot and power supply connectors to confirm they match the GPU's requirements.

Conduct Thorough Research on GPU Models: Explore various GPU models available in the market. Rely on expert reviews, benchmarks, and user feedback to gauge their performance and reliability.

Future-Proof Your Investment: Consider your future upgrade plans. If you desire a GPU capable of handling upcoming games and remaining relevant for several years, invest in a higher-end model with superior performance and more VRAM.

Pay Attention to VRAM: Look for a GPU with good Video RAM (VRAM) to handle high-resolution textures and settings. A minimum of 4GB is recommended for 1080p gaming, while 6GB or more is ideal for 1440p or 4K gaming.

Verify Connectivity Options: Ensure the GPU has the necessary display outputs to connect to your monitor or multiple monitors if required.

Consider Cooling Factors: Consider the cooling solution and physical size of the GPU. Custom cooling designs can improve performance and reduce noise.

Compare the Full Specifications: VRAM and memory clock speed affect performance, while power consumption determines the power supply and cooling you will need, so compare these between models before making your final decision.
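When comparing specifications, memory clock speed alone can mislead, because peak memory bandwidth also depends on the memory bus width. This sketch shows the standard peak-bandwidth arithmetic for two hypothetical cards with the same amount of VRAM but different memory configurations.

```python
def memory_bandwidth_gbs(data_rate_gbps_per_pin, bus_width_bits):
    # Peak bandwidth (GB/s) = per-pin data rate (Gbit/s) x bus width (bits) / 8 bits per byte.
    return data_rate_gbps_per_pin * bus_width_bits / 8

# Two hypothetical 8GB cards: a faster memory clock on a narrower bus vs. the reverse.
cards = {
    "card A (128-bit, 18 Gbps)": memory_bandwidth_gbs(18, 128),
    "card B (256-bit, 14 Gbps)": memory_bandwidth_gbs(14, 256),
}
for name, bandwidth in cards.items():
    print(f"{name}: {bandwidth:.0f} GB/s")
```

Here the card with the slower memory ends up with more bandwidth, because its bus is twice as wide.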

Random Access Memory (RAM)

RAM plays an important role in a gaming PC because it directly impacts the system's performance and the gaming experience.

Here's what you need to consider when choosing RAM for a gaming PC:

Motherboard Compatibility: Ensure the RAM is compatible with your motherboard. Check the motherboard's manual or manufacturer's website for the supported RAM types and speeds.

Capacity: For modern gaming, 8GB of RAM is the minimum recommended capacity. However, 16GB is now considered the sweet spot for most gamers, providing ample headroom for multitasking and future-proofing.

Memory Type (DDR4 vs. DDR5): Choose the memory generation your motherboard supports. DDR4 is widely used and outperforms older DDR3, while DDR5 is the newest standard on recent platforms. (DDR3, DDR4, and DDR5 are all types of SDRAM.)

Speed (Frequency): RAM speed is usually advertised in megahertz (MHz), although the figure is really a data rate in megatransfers per second (MT/s). Higher RAM speeds can offer marginal performance improvements in certain games, but the difference is not always significant. For DDR4, aim for DDR4-3000 or faster.

Timing (CAS Latency): CAS Latency (CL) is the number of memory clock cycles between a read request and the data becoming available, so the actual delay also depends on the RAM's speed. Lower CAS Latency at the same speed provides slightly better performance, but the difference is often not noticeable in real-world gaming.

Form Factor: Select the appropriate form factor to fit your system. Desktops usually use standard DIMMs, while laptops and small form factor PCs may require SODIMMs.
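Because CL is counted in clock cycles, two RAM kits can only be compared fairly once CL is converted into nanoseconds. For DDR memory the clock runs at half the data rate, so one cycle lasts 2000 / (data rate in MT/s) nanoseconds. A small sketch of that arithmetic, using example configurations rather than recommendations:

```python
def true_latency_ns(cas_latency, data_rate_mts):
    # DDR moves data twice per clock, so the clock is half the data rate and
    # one cycle lasts 2000 / data_rate_mts nanoseconds.
    return cas_latency * 2000 / data_rate_mts

for cl, rate in [(16, 3200), (18, 3600), (22, 3200)]:
    print(f"DDR4-{rate} CL{cl}: {true_latency_ns(cl, rate):.2f} ns")
```

A faster kit with a higher CL (DDR4-3600 CL18) ends up with the same real latency as a slower kit with a lower CL (DDR4-3200 CL16), which is one reason the differences are often marginal in practice.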

Storage (SSD/HDD)

Both an SSD (Solid State Drive) and an HDD (Hard Disk Drive) are useful in a gaming PC: an SSD provides faster loading times, while an HDD offers more affordable mass storage. Your storage choice directly affects game loading times, overall system responsiveness, and the gaming experience. When choosing between the two, here are the key factors to consider:

Speed and Performance:

SSD (Solid State Drive): SSDs offer much faster read and write speeds, significantly reducing game loading and system boot times. They also shorten level transitions and can reduce stutter in games that stream assets from disk.

HDD (Hard Disk Drive): HDDs are slower than SSDs but are more affordable and offer higher storage capacities. While they are suitable for storing large game libraries, they may result in slightly longer loading times compared to SSDs.

Capacity:

SSD: A 500GB or 1TB SSD is recommended for a gaming PC. This capacity allows you to install your favorite games and operating system, ensuring quick access to frequently played titles.

HDD: If you have a vast game library or require mass storage for other files, consider a 2TB or higher HDD for additional space at a more affordable price per gigabyte.

Motherboard

The motherboard serves as the foundation of your PC, connecting all the components together. Ensure it's compatible with your chosen CPU and has enough expansion slots for future upgrades.

To make an informed decision, consider the following essential factors:

Form Factor: The motherboard's form factor determines its physical size and compatibility with your PC case. Common form factors include ATX, MicroATX, and Mini-ITX. ATX motherboards offer more expansion slots, while Mini-ITX boards are compact and suitable for small builds.

Socket Type: The socket type must match your chosen CPU. Popular socket types for gaming PCs include LGA1151 and AM4. Ensure the motherboard's socket aligns with your processor to avoid compatibility issues.

Chipset: The chipset dictates the features and capabilities of the motherboard. Look for essential gaming features like support for PCIe 4.0, M.2 slots for fast storage, and USB 3.2 Gen 2 ports for high-speed data transfer.

RAM Support: Consider the maximum RAM capacity the motherboard can handle and the supported RAM speeds. For gaming, 16GB of RAM is recommended, and higher supported speeds can provide better performance.

Expansion Slots: The number and type of expansion slots are essential for connecting your graphics card and other peripherals. Ensure the motherboard has at least one PCIe x16 slot for the graphics card and additional slots for future upgrades.

Other features: There are a number of other features that you can consider when choosing a motherboard for a gaming PC, such as overclocking support, integrated Wi-Fi, and sound card support. These conveniences can enhance your overall gaming and PC usage experience.

Brand and Reliability: Opt for reputable motherboard manufacturers known for producing high-quality, reliable products. Brands like ASUS, MSI, Gigabyte, and ASRock are well regarded in the gaming community.

Future-Proofing: Consider future upgrades and technologies. Choosing a motherboard that supports the latest CPU generations and has room for expansion will keep your system relevant for a longer time.

Power Supply Unit (PSU)

A reliable PSU is essential to deliver stable power to all components. Choose a PSU with enough wattage to handle your system's power requirements and consider efficiency ratings.
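One common way to size a PSU is to add up each component's maximum power draw and leave some headroom. A minimal sketch, assuming the wattages below (hypothetical figures; check your parts' spec sheets) and an assumed 30% headroom, which is a rule of thumb rather than a standard:

```python
# Hypothetical peak power draw per component, in watts.
components = {"cpu": 125, "gpu": 220, "motherboard": 50, "ram": 10, "storage": 10, "fans": 15}

def recommended_psu_watts(draws, headroom=0.3):
    # Sum the peak draws, then add headroom (assumed 30%) so the PSU is not run at its limit.
    total = sum(draws.values())
    return total, total * (1 + headroom)

total, target = recommended_psu_watts(components)
print(f"estimated peak draw: {total} W, suggested PSU: at least {round(target)} W")
```

In practice you would then round up to the next common PSU size and check that it has the connectors your graphics card needs.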

Cooling Solution:

Gaming PCs can generate a lot of heat, so proper cooling is crucial to avoid overheating. Invest in a CPU cooler and ensure your case has good airflow.

Computer Case:

Choose a case that fits your components, provides good cable management, and has proper ventilation for cooling.

Operating System (OS):

Choose an operating system that supports your games and software, such as Windows 10 or the latest version of your preferred OS.

Gaming Accessories:

Don't forget gaming accessories like a gaming mouse, keyboard, and monitor. These can enhance your gaming experience further.

Conclusion

We hope this guide to choosing the right gaming PC components has been informative and helpful. Selecting the best computer parts helps keep your gaming experience smooth and lag-free, so don't skimp when it comes to building a top-notch gaming PC.

To buy computer components online, consider popular online retailers and computer accessories stores such as Amazon, Easyshoppi, and Newegg. They offer a wide selection of PC components from reputable brands.

Before making any purchase, ensure that the components you choose align with your gaming PC's requirements, budget, and future upgrade plans. Compare prices, read reviews, and take advantage of any promotions or discounts available to get the best value for your investment.

Remember, building a gaming PC is an exciting endeavor, and with the right components you can create a powerful, personalized gaming rig that delivers an exceptional gaming experience. If you are still unsure which components are right for you, email us with any questions or inquiries, or call +91-8360347878. We would be happy to answer your questions and will be in touch as soon as possible: https://www.easyshoppi.com/contact-us/

#Gaming Accessories Online#buy gaming accessories online#gaming pc online#Computer Accessories Store#Computer Accessories Shop#computer accessories shop online#Pc Accessories Online Store#Buy Computer Components Online#Buy Computer Accessories Online#Computer Accessories Online#gaming computer online#online gaming accessories store#buy pc accessories online#pc gaming accessories online#online computer Store#gaming accessories online


The real potential of small AI agents.

I recently conducted an experiment using a small AI model and fine-tuned it on a high-quality dataset of instructions and dialogue from a large model.

The goal was to explore the potential of AI beyond traditional coding work and share my findings with you.

For this experiment, I used the OPT-350M model and removed all programming-related questions and code blocks from the dataset to make it more general. While the model's performance didn't meet my initial expectations, it was still a significant step toward showing what can be accomplished if we broaden our perspective.
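The post doesn't describe how the code-related samples were removed. One simple approach is sketched here, assuming each record is a dict with hypothetical `instruction` and `response` fields: drop any record that contains a Markdown code fence or a programming keyword.

```python
import re

FENCE = "`" * 3  # Markdown code-fence marker
# Hypothetical keyword list; the author's actual filtering rules are not specified.
KEYWORDS = re.compile(r"\b(python|javascript|function|def|import|code)\b", re.IGNORECASE)

def is_code_related(record):
    text = record["instruction"] + "\n" + record["response"]
    return FENCE in text or bool(KEYWORDS.search(text))

dataset = [
    {"instruction": "Write a short email declining a meeting.", "response": "Hi Sam, thanks for the invite..."},
    {"instruction": "Fix this Python function.", "response": FENCE + "python\ndef f():\n    return 1\n" + FENCE},
    {"instruction": "Suggest three blog post ideas.", "response": "1. Morning routines 2. Budget travel 3. Home plants"},
]

general = [r for r in dataset if not is_code_related(r)]
print(len(general))
```

Keyword filters are crude: words such as "function" also appear in non-programming text, so a real pipeline would likely need more careful rules.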

Although I trained the model on only about 40MB of dialogue, instructions, and questions from a 500MB dataset, it was exciting to see it generate content and reason.

More training data would have improved the results, but time and resource constraints meant this was the best I could do. Nevertheless, the model performed remarkably well given the limited data.

My primary goal was to develop an AI model that could run on any device, without the need for massive GPUs or M1 MacBooks. I aimed to explore AI capabilities, generate content, and gain inspiration without API restrictions and safety layers while having fun instructing the model.

https://s3-us-west-2.amazonaws.com/secure.notion-static.com/a6e69dd1-3ee0-4ae5-b1ff-c9e85577512e/Untitled.png

“emma” during training evaluation. Note that “speaker=emma>” marks the AI's turn; it remembered the instructions and the job assigned to it.

Do you really need OpenAI? | Do you really need Alpaca 7B? | Do you really need Vicuna 13B?

Keep. things. simple.

In the age of large language models with billions of parameters, we often overlook the fact that AI can also be helpful in everyday tasks like writing emails or generating ideas. It raises the question: do we really need such massive models for simple tasks?

While the open-source community has made great strides in making AI accessible, I believe there is still more work to be done. Instead of creating more specialized models that are distilled from ChatGPT to write code or perform specific tasks, we should focus on making AI capabilities available to everyone.

https://s3-us-west-2.amazonaws.com/secure.notion-static.com/f0b0e086-a9b3-4899-bef0-aadb11da0717/Untitled.png

Overall, my experiment showed that with the right perspective and training data, we can expand the potential of AI and make it more accessible to everyone. However, we must also acknowledge the challenges and limitations that come with this field and work towards addressing them.

The idea behind this experiment is to develop an AI model in the 355M to 1B parameter range. This would make it possible to use the model on personal computers without the need for massive GPUs or extensive datasets.
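To see why a model of 355M to 1B parameters fits on a personal computer, a rough estimate of the memory needed just to hold the weights helps. This sketch assumes 4 bytes per parameter for fp32, 2 for fp16, and 1 for int8; activations, caches, and framework overhead are ignored, so real usage will be higher.

```python
def weights_memory_gb(num_params, bytes_per_param):
    # Memory for the weights alone; activations and runtime overhead come on top.
    return num_params * bytes_per_param / 1e9

for params in (355e6, 1e9):
    for dtype, nbytes in (("fp32", 4), ("fp16", 2), ("int8", 1)):
        print(f"{params / 1e6:.0f}M params, {dtype}: {weights_memory_gb(params, nbytes):.2f} GB")
```

Even at fp32, a 1B-parameter model needs about 4 GB for its weights, which is within reach of ordinary laptops and desktops.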

By removing safety layers and guidelines, this model would allow for greater exploration of AI's true potential in everyday tasks, such as chatting, generating ideas, editing text, and writing emails.

Moreover, with the rise of AI personas, we can create more engaging and interactive experiences for users. However, we are currently wasting valuable dataset tokens on coding prompts and answers, which could be better utilized in expanding the scope of AI's capabilities.

Entrepreneurs! — GPU! — BOOH!

I believe that the real potential of AI is being hidden from individuals and small businesses, as they often assume that such capabilities require expensive resources and powerful models like ChatGPT and GPT-4. However, as demonstrated by this experiment, even with limited resources and a small dataset, a smart and helpful AI agent can be developed and used to organize folders, assist with scheduling, and even psychoanalysis.

While larger models like ChatGPT and GPT-4 have their benefits, it's essential to remember that they are not the only way to achieve AI capabilities. By exploring the possibilities of smaller models, we can unlock the limitless potential of AI in our daily lives.

In conclusion,

This experiment was a fun and exciting exploration of the possibilities of AI beyond coding. By developing accessible and helpful AI models, we can empower individuals and businesses with new tools to improve their productivity and creativity.

AI doesn't have to be complex or require massive datasets or GPUs. By creating accessible and helpful AI models, we can all explore the possibilities of creating our own personal AI sidekick. With the right training data and perspective, anyone can develop an AI model that can assist with everyday tasks.

We should all be looking towards creating the next big thing, which could be the combination of You and AI. By embracing the potential of AI and exploring its possibilities beyond coding, we can create new and exciting solutions to everyday problems, and improve our overall quality of life.


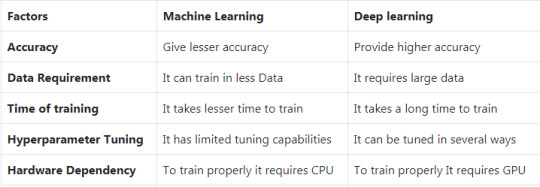

Machine Learning vs Deep Learning differences you should know

In this post, our specialists explain the differences between machine learning and deep learning in detail. To compare machine learning and deep learning, let's start with the basics.

Both DL and ML are types of artificial intelligence. In other words, you can also say that DL is a specific kind of ML. Both deep learning and machine learning start with training and test data and a model, and go through an optimization process to find the weights that make the model best fit the data.

Both deep learning and machine learning can handle numeric and non-numeric problems, although in several application areas, such as language translation and object recognition, deep learning models tend to produce better fits than machine learning models. Follow this post for a better understanding of the difference between machine learning and deep learning.

What is Machine Learning (ML)?

ML is a very valuable tool for explaining, learning, and recognizing patterns in data. One of the main motivations behind ML is that computers can be trained to automate tasks that would be impossible or exhausting for humans. The clear departure from traditional programming is that ML can make decisions with minimal human intervention.

ML uses data to train an algorithm that learns the relationship between the input and the output. Once the machine has finished learning, it can predict the value or class of a new data point.
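As a concrete, deliberately simple illustration of learning from labeled data and then predicting the class of a new point, here is a k-nearest-neighbours classifier. k-NN is chosen only because it fits in a few lines; the post does not name a specific algorithm, and the data points are made up.

```python
import math

# Labeled training data: (feature vector, class). Values are made up for illustration.
training = [((1.0, 1.2), "small"), ((1.3, 0.9), "small"), ((5.0, 5.5), "large"), ((5.4, 4.8), "large")]

def predict(point, data, k=3):
    # k-nearest neighbours: "learning" is just storing the examples;
    # prediction is a majority vote among the k closest training points.
    nearest = sorted(data, key=lambda item: math.dist(point, item[0]))[:k]
    labels = [label for _, label in nearest]
    return max(set(labels), key=labels.count)

print(predict((1.1, 1.0), training))  # small
print(predict((5.2, 5.1), training))  # large
```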

What is Deep Learning (DL)?

DL is computer software that mimics the network of neurons in a brain. Deep learning is a subset of ML, and it is called deep learning because it uses deep neural networks. The machine uses several layers to learn from the data.

The depth of the model is given by the number of layers in it. Deep learning is currently the state of the art in artificial intelligence. In deep learning, learning takes place within a neural network, which consists of layers stacked on top of one another. Every deep neural network includes three types of layers:

Input Layer

Hidden Layer

Output Layer
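The three layer types can be seen in a forward pass through a tiny network. This sketch uses arbitrary, untrained weights and plain Python; each layer is a matrix-vector product plus a bias, which is the kind of matrix arithmetic that GPUs are good at accelerating.

```python
# A 2-3-1 network: 2 inputs, one hidden layer of 3 units, 1 output. Weights are arbitrary.
W1 = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]  # hidden x input
b1 = [0.0, 0.1, 0.2]
W2 = [[1.0, -1.0, 0.5]]                       # output x hidden
b2 = [0.05]

def dense(W, b, x):
    # Matrix-vector product plus bias.
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def relu(v):
    # Non-linear activation: negative values become 0.
    return [max(0.0, z) for z in v]

def forward(x):
    hidden = relu(dense(W1, b1, x))  # input layer -> hidden layer
    return dense(W2, b2, hidden)     # hidden layer -> output layer

print(forward([1.0, 2.0]))
```

A "deep" network simply stacks more hidden layers between the input and output; training adjusts `W1`, `b1`, `W2`, and `b2` instead of leaving them fixed.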

Differences between machine learning and deep learning

Comparison of Deep Learning vs Machine Learning.

Now that you have a basic understanding of deep learning and machine learning, we will take some key points and compare the two techniques.

Data dependencies

The most important difference between conventional ML and DL is how performance changes as the amount of data grows. When the data set is small, DL algorithms don't perform that well, because they need a huge amount of data to learn it well. Conventional ML algorithms, with their hand-crafted rules, perform better in this scenario.

Hardware dependencies

Deep learning algorithms depend heavily on high-end machines, unlike ML algorithms, which can run on low-end machines. This is because deep learning requires GPUs, which are an integral part of how it works. DL algorithms perform a huge number of matrix multiplication operations, and these operations can be efficiently optimized using a GPU.

Feature engineering

Feature engineering is the process of putting domain knowledge into the creation of feature extractors to reduce the complexity of the data and make patterns more visible to learning algorithms. This process is expensive and difficult in terms of expertise and time.

In ML, most of the useful features need to be identified by an expert and then hand-coded according to the domain and data type.

For example

Features can be position, structure, orientation, shape, and pixel values. The performance of most ML algorithms depends on how accurately the features are identified and extracted.

DL algorithms try to learn high-level features directly from the data. This is a very distinctive part of deep learning and a major step ahead of traditional ML. As a result, deep learning reduces the work of developing a new feature extractor for every problem.
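To make the contrast concrete, here is what hand-crafted features look like for a tiny binary image: a person decides that area, bounding-box aspect ratio, and centroid position are worth measuring, and writes code for each. The image and the feature choices are illustrative only (the sketch assumes at least one dark pixel); a deep learning model would instead learn its features from the raw pixels.

```python
# A tiny 5x5 binary "image" (1 = dark pixel), made up for illustration.
image = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]

def hand_crafted_features(img):
    # Every feature here is chosen and coded by a person: the step DL tries to automate.
    coords = [(r, c) for r, row in enumerate(img) for c, v in enumerate(row) if v]
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    return {
        "area": len(coords),                                         # number of dark pixels
        "aspect_ratio": width / height,                              # shape of the bounding box
        "centroid": (sum(rows) / len(rows), sum(cols) / len(cols)),  # position
    }

print(hand_crafted_features(image))
```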

Problem Solving approach

When solving a problem with a traditional ML algorithm, it is generally recommended to break the problem into several parts, solve them individually, and combine the results. DL, in contrast, advocates solving the problem end to end.

Execution time

Usually, a DL algorithm takes a long time to train, because a deep learning model has so many parameters that training them takes longer than usual. ML, on the other hand, takes much less time to train, ranging from a few seconds to a few hours.

Where are Deep Learning and Machine Learning being implemented?

Computer Vision: for applications like vehicle number plate recognition and facial recognition.

Data Retrieval: for applications like search engines, covering both image search and text search.

Online Advertising, etc.

Marketing: for applications like automated email marketing.

Medical Diagnosis: for applications like cancer identification and anomaly detection.

Natural Language Processing: for applications like photo tagging and sentiment analysis.

Can one learn deep learning without ML?

Deep learning doesn't require much prior knowledge of the various machine learning techniques, so you can start learning deep learning without learning those techniques first. However, you will still need a good grasp of the kinds of problems deep learning is well suited to solve, and of how to interpret its results.

Conclusion:

In short, deep learning and machine learning are two distinct branches of the same common core of Artificial Intelligence. Both are useful in different situations, and one should not be favored over the other unless there is a real need. In this article, we gave a high-level overview and comparison of deep learning and machine learning techniques. If you want machine learning assignment help, or any machine learning assignment help within a given deadline, our experts are available to help you.

#best assignment experts#Programming Assignment Helper Programming Homework Helper#statistics help online statistics assignment help#best services


GPU Computing Assignment Homework Help

https://www.matlabhomeworkexperts.com/gpu-computing-assignment-help-matlab.php

A Graphics Processing Unit (GPU) is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display. GPUs are used in embedded systems, mobile phones, personal computers, workstations, and game consoles. GPU computing is the use of a GPU for general-purpose scientific and engineering computing. Its introduction opened new doors in research and science. Thanks to their massively parallel architecture, GPUs can complete computationally intensive tasks much faster than conventional CPUs.

We at Matlab Homework Experts provide expert help with Graphics Processing Unit assignments and Graphics Processing Unit homework. Our Graphics Processing Unit online tutors are highly skilled at providing college and university level Graphics Processing Unit assignment and homework help to students.

#GPU Computing Assignment Homework Help#GPU Computing Assignment Homework#GPU Computing Assignment help#GPU Computing homework help#GPU Computing Assignment Homework experts#GPU Computing#GPU Computing Assignment solution#online GPU Computing Assignment Homework Help#help with GPU Computing Assignment Homework Help#GPU Computing projec help


Sims 2 Pink Flash & Crash FIX!

Hey penguins, wanted to follow up on my previous post about my Sims 2 crashing and the tricks I used to get it working again! Since applying them, I haven’t had a single crash or any purple flashing! (At this point, I have played the game for several hours, switched households multiple times and loaded a ton of lots to make sure the issues are gone)

=============================================================

Tip #1: Make sure to check that your Graphics Card is listed in the Video Cards.sgr files in your Sims 2 installation folder! If not, use the Graphics Rules Maker, add your GPU to the list and double-check that it HAS been added there (I had problems with this and had to do it manually in the end!). Make sure to copy the new Graphics Rules AND Video Cards files to both folders in the installation location (Config and CSConfig).

Tip #2: Make sure that you have the correct texture memory amount assigned for your game! If you haven’t already, run DxDiag on your computer (https://support.microsoft.com/en-us/help/4028644/windows-open-and-run-dxdiagexe), click “Save all info” at the bottom and save it to your desktop. Open it and check your Display Memory under “Display Devices”. That Display Memory is the amount you need to enter in your Graphics Rules files. See the Leefish post for more info: http://www.leefish.nl/mybb/showthread.php?tid=7909

Tip #3: If you have applied the “4 gig patch”, make sure that it is working properly. Check your Documents > EA Games > The Sims 2 > Logs folder and open a file called “COMPUTERNAME-config-log”. If the memory under “Machine info” (top of the list) is anything less than 4000MB (mine says 4096 MB), the patch hasn’t been fully applied. Jessa shows in this VIDEO how to download AND fix the patch: https://youtu.be/-0iwuLZyjMg

Tip #4: Especially if you are playing on Windows 10, make sure to do the Memory Allocation Fix that Jessa shows in the video above! You NEED this! This fix also helps Windows 7 users!!

Tip #5: (Not sure if this is necessary after applying #4, but I did it before I fixed the memory leak issue and didn’t have any crashes after that either) Make sure your Virtual Memory amount is set high enough in Control Panel > System > Advanced system settings > Advanced tab > Performance Settings > Advanced > Virtual Memory. Set the custom size for your main drive to 25,000-30,000 MB and click “Set”. === Thanks so much to Gina for this tip! ===

Check out Jessa’s post on ModTheSims for more links and important information: http://modthesims.info/showthread.php?t=610641

I’m listing all the tricks I did here, both for you guys and for myself as well, in case I need to go through this whole thing again at some point in the future (after switching Graphics Cards, which thankfully won’t happen for a few years!). Hopefully it’ll be useful and helpful for you guys!

Special thanks to Jessa, Gina and everyone on Leefish forums for all the useful information and tips on how to fix the graphical problems!

Now, on to more Sims 2 livestreams!!!

==============================================================

EDIT// Even after applying all the tips above, the flashing pink glitch and the crashing that followed came back to my game after a few days. If you continue to have these issues despite applying the changes above, try these methods! (My game has FINALLY stopped crashing and flashing after I tried these and made permanent changes to the game itself!)

--------

IMPORTANT! Watch THIS VIDEO to learn about the flashing pink issue and apply one or more of these tricks:

Tip #6: Use “boolProp UseShaders false” to stop the flashing (I’ve added this to my userStartup.cheat file so it stays on automatically, especially since I have certain neighborhoods that will always flash pink in the neighborhood view... *cough* Clayfield *cough*).

Tip #7: Delete the game thumbnails from your Documents > EA Games > The Sims 2 > Thumbnails folder.

--------

If you start getting the flashing pink glitch while playing on a lot (this can happen especially if you’ve been running the game for a long time), save and exit the game and delete all thumbnails before restarting it. I have to delete the thumbnails every time before I load up the game.

Tip #8: Change your in-game settings to match the ones shown in the video above! (Shadows: off, Lighting: medium, View distance: medium) This will help prevent any further issues.

I’m running Win10 with an Nvidia GTX 1070 graphics card and an Intel i7-8700 processor, and disabling the shaders AND deleting thumbnails regularly has proven to be the only way for me to play the game without random crashes caused by the flashing pink texture issues.


What gets me about all of this is what we could be using all that computational power for besides Enriching Captain Planet Villains. Like, okay, I'm getting into something called pose estimation in animal behavior. I can take video of two animals interacting and assign a machine learning algorithm to look at every frame of that video and tell me, in three dimensions if I use a few cameras at once, where each joint in the animal's body is in space. That gives me a ton of non-invasive space to ask complicated questions about animal social interactions without having to alter anything about those interactions or compress the potential behavior space to make data scoring easier. I find that really exciting, but the cost of it is that it takes a (to me) mind boggling amount of computational capacity to store that video and run these calculations on it. I'm in the process of trying to purchase a research machine that will be able to store a few years' worth of raw data for ten years, so anyone who wants can check my work.

A) That GPU shortage? That also affects machines like this, because these days most research and data-crunching software is written to parallelize processing on the GPU as well as the CPU. The program I'm currently using for this stuff requires a fairly high-end NVIDIA GPU to run in any kind of reasonable time frame.

B) the machine I'm trying to learn how to build will cost something like $10,000. It is not cheap to devise that level of storage and computational capacity.

C) in terms of computational capacity, just one shelf on those racks inside those rows and rows of Bitcoin mining rigs would almost certainly be enough and more than enough to let me and researchers like me execute these kinds of research techniques that would help us better understand both other animals and ourselves. I'm doing that work with neuroscience researchers, and I can think of applications that would teach us all kinds of things about the way that moving our bodies through the world can vary, break, and be used.

Or, hell, let's not make this about me. Let's imagine that our society chose to funnel that level of resources into a robust series of gaming rigs that improves global access to a new form of storytelling. Think about what we could collectively do in terms of art and experience and telling one another stories if we had the time and space to use computers to tell one another better and bigger stories. How could we use that to inform our lives and imagine a better world?

And all that just assumes we don't change our mining practices to be more sustainable, or streamline recycling initiatives to make it easier and more likely to save the materials in broken tech products so they don't get wasted in landfills, or pass laws forcing new tech to be repairable and replaceable so that we don't have to buy a whole new phone with whole new chips every two fucking years.

There's so much possibility bound up in that fucking Bitcoin mine. And it's getting spent in service of making some rich fuckers who already raw-dogged the global economy once even richer as they scam every idiot they can trick into believing that scamming the next guy down the line is inevitable.

Thanks! I hate it.
